Abstract

The outbreak of jellyfish blooms poses a serious threat to human life and marine ecology. Therefore, jellyfish detection techniques have attracted great interest. This paper investigates a jellyfish detection and classification algorithm based on optical images and deep learning theory. First, we create a dataset comprising 11,926 images. Second, an MSRCR underwater image enhancement algorithm with fusion is proposed. Finally, an improved YOLOv4-tiny algorithm is proposed by incorporating a CBAM module and optimizing the training method. The results demonstrate that the improved algorithm reaches a detection accuracy of 95.01% and a detection speed of 223 FPS, both of which are better than those of the compared algorithms such as YOLOv4. In summary, our method can accurately and quickly detect jellyfish. The research in this paper lays the foundation for the development of an underwater jellyfish real-time monitoring system.

Introduction

With the rapid development of deep-sea exploration technology, people pay increasing attention to the exploration and utilization of marine resources, which is crucial for acquiring, collecting, and rationally using navigational information1.

The Bohai Sea, situated in eastern China, is rich in marine resources. However, the local economy and ecosystem have been significantly impacted by periodic catastrophes caused by marine organisms, such as red tides, green algae, and jellyfish blooms2,3. Among these calamities, jellyfish blooms have garnered international attention as a prominent maritime ecological concern. Nonetheless, real-time jellyfish detection remains challenging due to the complex marine environment and the immaturity of the related detection technologies4.

In the past, oceanographers typically caught jellyfish by collecting water samples or using simple trawling techniques. Subsequently, these jellyfish specimens were brought to laboratories for manual identification. While this approach allows for effective research and statistical analysis of jellyfish species, it requires significant human and material resources. In an attempt to address these challenges, some scholars have utilized mathematical modeling and biological detection techniques for jellyfish detection. However, these methods have notable limitations and cannot provide real-time detection5,6,7. Furthermore, they can inadvertently harm living organisms, making it difficult to satisfy the demands of real-time detection and identification8.

The advancement of underwater optical and acoustic imaging has greatly advanced jellyfish monitoring. Sonar and optical imaging are relatively mature among the various jellyfish detection technologies9,10.

Sonar imaging monitors jellyfish by transmitting and receiving sonar signals, but the resulting images have low resolution and cannot distinguish between species11. Optical imaging, by contrast, has been more widely used in jellyfish monitoring. This is primarily due to its high image resolution, non-contact operation, real-time imaging, and ability to identify species. In addition, as jellyfish are slow-moving creatures, obtaining clear images through optical equipment is relatively straightforward.

Convolutional Neural Networks (CNNs) have progressively become a novel approach to monitoring and studying marine life. CNN-based target detection algorithms fall primarily into two groups: regression-based algorithms, such as YOLOv4-tiny, and algorithms based on region proposals, such as Faster R-CNN.

This research adopts YOLOv4-tiny as its starting point because Faster R-CNN has poor real-time performance. However, the original YOLOv4-tiny algorithm suffers from limited accuracy.

The rest of this article is organized as follows. "Related research and contributions" section introduces the related works on jellyfish detection and their limitations. "Dataset preparation and preprocessing" section presents the establishment of the dataset and the underwater image enhancement algorithm. "Improved YOLOv4-Tiny Jellyfish Detection Algorithm" section describes the jellyfish classification method based on the improved YOLOv4-tiny algorithm. "Experiment and result analysis" section shows experimental results to validate the effectiveness and robustness. The conclusion is provided in "Conclusions" section.

Related research and contributions

This section introduces the existing work related to the detection and monitoring of jellyfish and then presents the paper’s main contributions.

Related research literature

Underwater image processing is a necessary means to improve detection accuracy. Therefore, in this paper, we first introduce recent research on underwater image processing. In 2018, Lu et al. proposed the guided image filtering for contrast enhancement method to improve the quality of underwater images. However, the guided filter they used could only be applied to grayscale images, and therefore performed poorly in color restoration12.

In 2021, Liu studied underwater image restoration algorithms based on the dark channel prior method, which improved the restoration effect to some extent. However, due to the large number of parameters, the algorithm's robustness was poor13.

In 2022, Li et al. proposed a method combining the dark channel and MSRCR algorithms to achieve underwater image dehazing and enhancement, but the method had poor scene applicability14.

In 2022, Zhou et al. proposed an algorithm for automatic color correction of underwater images, which solved the color cast caused by the attenuation difference of different color channels in underwater images and could adapt to various underwater environments15.

In 2023, Zhou et al. further proposed the multi-interval sub-histogram perspective equalization method for underwater enhancement, which achieved contrast enhancement of underwater images through adaptive interval partitioning and histogram equalization, with excellent image restoration effects16.

Furthermore, we introduce related research on jellyfish detection technology. Due to the harm and research value of jellyfish, researchers have long used various technologies, including acoustic, optical, and remote sensing, to search for jellyfish. In 1994, Davis et al. designed a submarine plankton video recording system. Rich visual information, quick recording, and the capacity to capture in-depth jellyfish movement are all benefits of the technology17.

In 2006, Houghton et al. recorded jellyfish movement characteristics and distribution using aerial photography technology. However, this approach could only observe large-sized jellyfish near the sea’s surface18.

In 2015, Donghoon Kim et al. developed an autonomous jellyfish detection and cleaning system. At the same time, the team also proposed a jellyfish detection algorithm based on drone photography. However, it cannot identify jellyfish19.

In 2016, Seonghun Kim et al. investigated jellyfish’s spatial and vertical distribution by acoustic and optical methods, but this method had limitations in monitoring tiny jellyfish20. Hangeun Kim et al. put forward a drone detection system for jellyfish. The design captured the movements of jellyfish on the sea surface and recognized them through deep learning. However, it was limited to the Aurelia aurita21.

In 2017, Jungmo Koo and colleagues developed a system for mapping jellyfish distribution using unmanned aerial vehicles. They employed a deep neural network that demonstrated high precision and fast speed in identifying jellyfish. Nonetheless, this approach can discriminate only a single jellyfish species22. Martin-Abadal et al. used a neural network to design a jellyfish monitoring system for the automatic detection and quantification of various jellyfish types, which also enables long-term monitoring of their presence. However, the system's applicability is constrained23.

In 2018, French et al. used underwater imaging technology and a neural network to monitor and classify jellyfish. The classification accuracy reached up to 90%, suggesting that the system can serve as an effective tool for predicting jellyfish outbreaks. However, the system is limited to detecting individual jellyfish outbreaks.

In 2020, a novel technique for the automated detection and quantification of jellyfish was developed by Martin Vodopiveca et al. This approach enables the continuous monitoring of jellyfish, while simultaneously assessing the accuracy of manual counting24. Through the use of optical imaging and automated image analysis, the algorithm demonstrates the feasibility of identifying jellyfish. However, the current implementation remains limited to offline recognition and is not yet capable of real-time monitoring.

In 2021, Chang Qiuyue et al. of Yanshan University proposed an improved YOLOv3 algorithm, which can achieve real-time detection and identify seven jellyfish species. But its speed and accuracy need to be improved25.

In the past, although a series of studies have been carried out on jellyfish detection using acoustics and optics combined with deep learning theory, the research on jellyfish detection is still in the primary stage. So, further study and improvement are needed to improve detection accuracy, speed, and species identification.

Contributions

The main contributions of this paper are as follows:

1. A dataset containing seven species of jellyfish and fish is established in "Dataset preparation and preprocessing" section, including Cyanea purpurea, Rhizostoma pulmo, Phacellophora camtschatica, Agalma okeni, Aurelia aurita, Phyllorhiza punctata, Rhopilema esculentum, and fish, a total of 11,926 images.

2. An underwater image enhancement algorithm is proposed based on Multi-Scale Retinex with Color Restoration (MSRCR) and an underwater image fusion algorithm, to solve severe blurring and color degradation of underwater images.

3. An attention mechanism module is added in the feature extraction network of the YOLOv4-tiny algorithm, to improve the feature extraction ability and strengthen the ability to identify small and occluded targets.

4. Mosaic enhancement, which enhances the data at the network's input when training the network, is added. Meanwhile, label smoothing and cosine annealing learning rate training methods are applied to improve the overall detection effect of the algorithm.

Dataset preparation and preprocessing

Dataset preparation

The dataset used in our study comprises jellyfish images obtained from two sources: web crawling and our own lab. The targets to be recognized are categorized into eight classes: fish, which act as distractors, and seven jellyfish species, namely Cyanea purpurea, Rhizostoma pulmo, Phacellophora camtschatica, Agalma okeni, Aurelia aurita, Phyllorhiza punctata, and Rhopilema esculentum. Among them, C. purpurea, R. pulmo, and P. camtschatica are derived from the public dataset by Miguel Martin-Abadal et al.23. The images of A. okeni, P. punctata, the majority of A. aurita, and fish were obtained from online websites. Additionally, images of R. esculentum and some A. aurita were collected in our lab. Before augmentation, the dataset consists of a total of 2141 photos. Figure 1 displays the images of various jellyfish.

Supervised data augmentation methods are employed for dataset augmentation. The dataset is divided into the training set, the validation set, and the test set. The distribution of the dataset is shown in Table 1.

Underwater image preprocessing

First, we use MSRCR combined with an underwater image fusion method to address the color deterioration and blurring of underwater images acquired by optical equipment. MSRCR, known for its ability to enhance color in input images26, is employed as the first technique in our new algorithm. The second technique focuses on image denoising and contrast enhancement27,28,29. Subsequently, the output images generated by these two techniques are merged using an underwater fusion algorithm, resulting in a final image with vibrant color, sharp contrast, and distinct texture30. Fig. 2 illustrates the detailed steps of this new algorithm.
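For orientation, the commonly cited form of MSRCR can be written as follows. This is a sketch of the standard formulation, not necessarily the exact variant used here: the symbols $w_n$, $\sigma_n$, $\alpha$, $\beta$, $g$, and $b$ are assumed notation, and their values are not given in this paper.

```latex
% I_i: intensity of color channel i; G_{\sigma_n}: Gaussian surround at scale \sigma_n; * denotes convolution
R_{\mathrm{MSR},i}(x,y) = \sum_{n=1}^{N} w_n \left[ \log I_i(x,y) - \log\big( (G_{\sigma_n} * I_i)(x,y) \big) \right],
\qquad \sum_{n=1}^{N} w_n = 1

% Color restoration factor computed from the three channels
C_i(x,y) = \beta \left[ \log\big( \alpha\, I_i(x,y) \big) - \log \sum_{j \in \{R,G,B\}} I_j(x,y) \right]

% Final output with gain g and offset b
R_{\mathrm{MSRCR},i}(x,y) = g \left[ C_i(x,y)\, R_{\mathrm{MSR},i}(x,y) + b \right]
```

The multi-scale term recovers local contrast at several surround sizes, while the factor $C_i$ compensates for the greying that plain multi-scale Retinex tends to introduce.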

In our study, we employ five different algorithms to process the original image. These algorithms include the dark channel prior defogging13, contrast enhancement proposed by Lu et al.12, MSRCR26, underwater image fusion, and our suggested improved underwater image enhancement algorithm. The resulting images are subsequently evaluated using four evaluation parameters: Entropy29, UCIQE31, UIQM32 and EOG33. Figure 3 visually presents the effects achieved by applying five algorithms. The evaluation results are given in Table 2.
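Two of the four metrics are simple enough to sketch directly. The snippet below is a minimal illustration using common textbook definitions of grey-level entropy and energy of gradient (EOG); the exact definitions and normalization used for Table 2 are not stated here and may differ. The function names and the toy images are hypothetical, and UCIQE and UIQM are omitted because they involve several weighted color-space terms.

```python
import math
from collections import Counter


def image_entropy(gray):
    """Shannon entropy (in bits) of the grey-level histogram of a 2-D list of ints."""
    pixels = [p for row in gray for p in row]
    n = len(pixels)
    counts = Counter(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def energy_of_gradient(gray):
    """Sum of squared horizontal and vertical first differences (unnormalized)."""
    h, w = len(gray), len(gray[0])
    total = 0
    for y in range(h):
        for x in range(w):
            if x + 1 < w:
                total += (gray[y][x + 1] - gray[y][x]) ** 2
            if y + 1 < h:
                total += (gray[y + 1][x] - gray[y][x]) ** 2
    return total


# Toy inputs: a featureless image and one with a single sharp vertical edge.
flat = [[128] * 4 for _ in range(4)]
edges = [[0, 0, 255, 255] for _ in range(4)]

flat_entropy = image_entropy(flat)
edge_entropy = image_entropy(edges)
edge_eog = energy_of_gradient(edges)
```

Higher entropy indicates a richer distribution of grey levels, and higher EOG indicates sharper edges, which is why both are expected to rise after successful enhancement.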

It can be seen from Table 2 that the Entropy, UCIQE, and EOG reach their maximum when the improved algorithm is applied. Moreover, the improved algorithm also meets the requirements of highlighting the target objects and enhancing the color of underwater images. Overall, the improved algorithm presented in this section demonstrates excellent performance and could be applied to enhance the optical images used in the jellyfish detection system. Figure 4 shows the results processed by the five algorithms.

Some conclusions can be derived from Fig. 4a–f: The dark channel prior defogging algorithm produces limited color recovery and discernible changes in image texture details. The contrast enhancement method primarily focuses on recovering image texture details, with subpar color recovery. The MSRCR algorithm performs well in image color recovery, but compromises the texture information. While the contrast of the picture backdrop is somewhat increased, the color recovery impact of the fusion algorithm is inferior to that of MSRCR. The new algorithm, on the other hand, produces the most satisfactory overall result. It achieves a mild yet effective color recovery, exact image texture details, and high contrast.

Improved YOLOv4-Tiny Jellyfish Detection Algorithm

The YOLO algorithms have gained widespread popularity in target detection applications. Among them, the YOLOv4-tiny algorithm has fast detection speed, relatively high detection accuracy, a simple network model, and low hardware needs34,35,36. The YOLOv4-tiny has a significantly faster recognition speed than YOLOv4, but its accuracy has declined37. Therefore, this work will adopt two ways to enhance the YOLOv4-tiny algorithm's accuracy and make it compliant with the criteria of jellyfish detection accuracy and speed. Specific improvements are: (1) Add the attention mechanism module to improve the feature extraction ability of the network and strengthen its recognition of obscured and tiny targets. (2) The mosaic data enhancement is used at the network's input when training the network. To enhance the overall detection impact, two training techniques are simultaneously introduced: label smoothing and the cosine annealing learning rate.

Add CBAM

CBAM is an attention mechanism that combines spatial and channel attention38. Compared with a mechanism that uses channel attention alone, this hybrid attention mechanism can achieve better results. Therefore, the hybrid attention mechanism is introduced to make the neural network concentrate more on the target areas that contain essential information and suppress irrelevant information, thereby improving accuracy.

YOLOv4-tiny obtains feature information through the neural network and has no explicit mechanism for weighting the extracted features. As a result, it easily overlooks tiny targets and obscured objects. In this paper, the CBAM is added after upsampling. The attention mechanism weights the feature data of the target objects with dynamic weight coefficients, thus improving the network's ability to attend to the target objects and alleviating the problem of small targets and occluded objects being ignored. Figure 5 depicts the network topology after adding the CBAM.
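For reference, the two attention maps of CBAM as defined in the original CBAM formulation38 can be written as below. The notation ($F$ for the input feature map, $\sigma$ for the sigmoid function, $f^{7\times 7}$ for a convolution with a 7×7 kernel) follows that formulation and is not specific to the network in Fig. 5.

```latex
% Channel attention: a shared MLP applied to average- and max-pooled descriptors
M_c(F) = \sigma\big( \mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F)) \big)

% Spatial attention: a convolution over channel-wise average and max maps
M_s(F) = \sigma\big( f^{7\times 7}\big( [\mathrm{AvgPool}(F);\ \mathrm{MaxPool}(F)] \big) \big)

% Sequential refinement (\otimes is element-wise multiplication with broadcasting)
F' = M_c(F) \otimes F, \qquad F'' = M_s(F') \otimes F'
```

The channel map decides which feature channels matter and the spatial map decides where to look, which is the sense in which the feature data are weighted with dynamic coefficients.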

Improvements in training methods

To improve the detection accuracy further, we will introduce the mosaic data enhancement, cosine annealing learning rate and label smoothing in this section.

Mosaic is a form of data enhancement used before model training. Its purpose is to merge four random images into a single new image, thereby enriching the background of the detection target. This process enhances the variety and informational content of the input images, while also reducing overfitting. The steps involved in the mosaic data enhancement are as follows:

1. Read four random images;

2. Crop, zoom, flip, and apply color gamut changes to each of the four images;

3. Stitch the images from the second step to obtain an image in the specified size range.

The mosaic enhanced images are shown in Fig. 6.
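The stitching procedure can be sketched in a few lines. This is a simplified, dependency-free illustration rather than the training pipeline used in this work: images are plain 2-D lists, zooming is a nearest-neighbour resize, and cropping, color gamut changes, and the matching transformation of bounding boxes are left out. The helper names `resize_nearest` and `mosaic` are hypothetical.

```python
import random


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of a 2-D list image to out_h x out_w."""
    in_h, in_w = len(img), len(img[0])
    return [[img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def mosaic(images, size=8, rng=None):
    """Stitch four images into one size x size canvas around a random centre."""
    assert len(images) == 4
    rng = rng or random.Random()
    # Random split point, kept away from the borders so every tile is non-empty.
    cx = rng.randint(size // 4, 3 * size // 4)
    cy = rng.randint(size // 4, 3 * size // 4)
    # (top, left, height, width) of the four quadrants.
    boxes = [(0, 0, cy, cx), (0, cx, cy, size - cx),
             (cy, 0, size - cy, cx), (cy, cx, size - cy, size - cx)]
    canvas = [[0] * size for _ in range(size)]
    for img, (top, left, h, w) in zip(images, boxes):
        if rng.random() < 0.5:            # random horizontal flip
            img = [row[::-1] for row in img]
        tile = resize_nearest(img, h, w)  # zoom the image to its quadrant
        for y in range(h):
            canvas[top + y][left:left + w] = tile[y]
    return canvas


# Four constant "images" with pixel values 1..4 stand in for real photos.
imgs = [[[v] * 5 for _ in range(5)] for v in (1, 2, 3, 4)]
result = mosaic(imgs, size=8, rng=random.Random(0))
```

In a real detector the ground-truth boxes of each source image must be scaled, flipped, and shifted with its tile, and boxes that fall outside the tile must be clipped or dropped.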

The cosine annealing learning rate schedule reduces the learning rate along a cosine curve. At the start of training, the learning rate decreases slowly; in the middle of the schedule it decreases more quickly, which accelerates convergence; near the end it decreases slowly again to avoid overshooting the optimal point. This approach often yields favorable results.
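A minimal sketch of a single-cycle cosine schedule is given below. The function name and the values of `lr_max`, `lr_min`, and the number of steps are illustrative assumptions; the settings actually used in the experiments are those of Table 3, and warm-up or restarts, if any, are not modelled.

```python
import math


def cosine_annealing_lr(step, total_steps, lr_max, lr_min=0.0):
    """Learning rate at `step`, decaying from lr_max to lr_min along a half cosine."""
    step = min(max(step, 0), total_steps)
    cos_term = 1.0 + math.cos(math.pi * step / total_steps)
    return lr_min + 0.5 * (lr_max - lr_min) * cos_term


# Hypothetical schedule: 100 steps from 1e-3 down to 1e-5.
schedule = [cosine_annealing_lr(t, 100, lr_max=1e-3, lr_min=1e-5) for t in range(101)]
```

The schedule starts at `lr_max`, passes through the midpoint of the two rates halfway through training, and flattens out as it approaches `lr_min`.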

The majority of jellyfish have long tentacles and umbrella-shaped heads, and therefore look strikingly similar. Because of this, manual labeling will inevitably contain mistakes that affect the final predictions. Label smoothing prevents the model from over-trusting the labels by assuming during training that they may be incorrect. In this section, label smoothing is introduced to improve accuracy, with a smoothing coefficient of 0.01.
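As an illustration, the widely used uniform form of label smoothing is sketched below with the coefficient 0.01 and the eight classes of this dataset. The exact formulation applied in training is not spelled out in the text, so the blending rule and the function name `smooth_labels` should be read as assumptions.

```python
def smooth_labels(one_hot, epsilon=0.01):
    """Blend a one-hot target with the uniform distribution over its classes."""
    k = len(one_hot)
    return [y * (1.0 - epsilon) + epsilon / k for y in one_hot]


# One-hot target for the fifth of eight classes.
target = [0, 0, 0, 0, 1, 0, 0, 0]
smoothed = smooth_labels(target)
```

With these settings the labelled class keeps a target of 0.99125 and every other class receives 0.00125, so a mislabelled image is penalized slightly less harshly than with hard 0/1 targets.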

Comprehensively improved algorithm

Combining the improved network and training method, a comprehensively improved algorithm is obtained, and the structure is depicted in Fig. 7.

Experiment and result analysis

Experimental process

1. Experimental method

Seven tests are carried out to verify the improved algorithm and training method. The seven groups of experiments are as follows: (1) the original YOLOv4 algorithm; (2) the original YOLOv4-tiny algorithm; (3) the improved YOLOv4-tiny network with CBAM added; (4) the improved network and mosaic enhancement; (5) the improved network and cosine annealing learning rate; (6) the improved network and label smoothing; (7) the comprehensively improved algorithm (including the improved network, mosaic enhancement, cosine annealing learning rate, and label smoothing).

The network is trained separately with the original data and the enhanced data described in "Dataset preparation" section. The training, validation, and test sets are split as shown in Table 1.

2. Parameter settings

The hyperparameter settings are shown in Table 3.

Table 3 Hyperparameter settings.

Algorithm comparison

To demonstrate the effectiveness and superiority of our proposed method, we compared it with several classical and state-of-the-art methods, including YOLOv4, YOLOv5, YOLOv6, YOLOv7, and YOLOv8. To compare the different algorithms more effectively and intuitively, we compared their complexity and accuracy, and the results are shown in Table 4. Layers, parameter quantity, and FPS reflect the complexity of the algorithm. Lower values of layers and parameter quantity indicate a simpler architecture with fewer parameters and lower complexity, while higher FPS indicates faster processing speed. mAP and F1 reflect the accuracy of the algorithm, with higher values indicating better performance. As shown in Table 4, our proposed algorithm maintains a lightweight structure while achieving high detection performance.

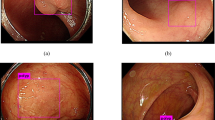

Figure 8 shows the results of different methods trained on the same dataset in the comparative experiment of jellyfish detection. It shows that, regardless of the method used, both false positives and false negatives occur in images containing multiple jellyfish, which indicates that jellyfish detection is a challenging task. Among the detection results, the proposed algorithm has the highest confidence but produces significant false negatives. YOLOv7 detected the most jellyfish and maintained a high level of confidence. YOLOv5, YOLOv6, and YOLOv8 did not perform well in jellyfish detection. Therefore, we can consider the YOLOv7 method as a baseline for jellyfish detection, while other methods still need improvement.

Ablation experiment process

The experimental data are quantitatively analyzed in this part using five evaluation indices, and the findings are as follows:

1. Average precision (AP) value analysis

Tables 5 and 6 list the AP results of the seven experimental methods described above.

Table 5 The AP results of seven algorithms with original dataset.

Table 6 The AP results of seven algorithms with enhanced dataset.

Tables 5 and 6 indicate that the use of data enhancement improves the Average Precision (AP) values for most jellyfish species. In addition, the comprehensively improved algorithm achieves a detection accuracy over 95% for most jellyfish types. The mean average precision (mAP) of the seven algorithms is listed in Table 7.

It can be seen from Table 7 that, except for the original YOLOv4-tiny algorithm, the mAP of the other six algorithms is higher than the values without data enhancement, proving the effectiveness of data enhancement. Comparing the mAP values obtained by the various methods, the mAP of the YOLOv4-tiny algorithm after data enhancement is 1% lower than that of the YOLOv4 algorithm. This indicates a reduction in the feature extraction capability of the YOLOv4-tiny network due to its simplified structure. Table 7 further demonstrates that the improved network structure leads to a 1.59% increase in mAP compared to YOLOv4-tiny and a 0.59% increase compared to YOLOv4. Among the different training enhancements, mosaic enhancement produces the most significant effect, surpassing the impact of the cosine annealing learning rate and label smoothing.

Table 7 The mAP values of seven algorithms.

The mAP can reach 95.01% utilizing data enhancement and the improved algorithm, which is 2.55% higher than the original YOLOv4-tiny algorithm, illustrating that the improved algorithm has the highest detection accuracy. The bold data in the table is the mAP value of the comprehensively improved algorithm.

2. FPS analysis

Table 8 displays the FPS values for the seven algorithms. From Table 8, we can see that the detection speed of the YOLOv4-tiny algorithm can reach 248 FPS, which is about 5.6 times that of the YOLOv4 algorithm at only 43.9 FPS. When the average accuracy is also considered, it is clear that YOLOv4-tiny sacrifices a small amount of precision to increase detection speed. The detection speed of the comprehensively improved algorithm can reach 223 FPS, which is only slightly lower than that of the original YOLOv4-tiny algorithm. As a result, the comprehensively improved algorithm improves the detection accuracy while sacrificing a bit of the detection speed.

Table 8 The FPS of seven algorithms.

3. Precision analysis

Table 9 shows the average precision of each algorithm. It shows that the precision of the comprehensively improved algorithm after data enhancement can reach 92.56%, which can satisfy the detection criteria.

Table 9 The precision of seven algorithms.

4. Recall analysis

The average recall of the seven algorithms introduced in this section is shown in Table 10. When processing the enhanced dataset, the recall of the comprehensively improved algorithm can reach 89.69%, which is the highest figure among all methods. The bold data in the table are the evaluation parameters obtained by the comprehensively improved algorithm. The mAP and recall values of the seven methods both before and after data enhancement are summarized in Fig. 9.

Table 10 The recall value of seven algorithms.

5. F1 score analysis

The comparison of the F1 scores of the seven algorithms is shown in Table 11. The greater the F1 score, the better the network performance, since it indicates how well the model balances precision and recall. It can be seen that the F1 scores of the seven algorithms are all distributed around 0.90, and the comprehensively improved algorithm has the highest F1 score.

Table 11 The F1 scores of seven algorithms.

The seven algorithms are evaluated in this section using the objective assessment indices of mAP, FPS, precision, recall, and F1 score. The results show that YOLOv4-tiny dramatically improves the detection speed compared to the YOLOv4 algorithm while sacrificing a little precision. Its detection speed is about 5.6 times that of YOLOv4, and other evaluation indicators are almost equal. The detection accuracy of the comprehensively improved algorithm has been improved, and the detection speed can be maintained above 220 FPS, which can meet the demands for rapid testing. The comprehensively improved algorithm reaches 95.01% accuracy at 223 FPS. Its accuracy is slightly lower than that of the improved YOLOv3 method previously proposed in our lab, which had 95.53% accuracy and a detection speed of 52.53 FPS39, but its detection speed is about four times that of the prior method. Through the objective evaluation and analysis, it can be seen that the comprehensively improved algorithm has the best overall effect, which quantitatively illustrates the effectiveness of the comprehensively improved algorithm we proposed. Figure 10 shows the image results processed by different methods in the ablation experiment.

Figure 10a illustrates that the YOLOv4-tiny algorithm mistakenly detects the right jellyfish as A. aurita and identifies the jellyfish in the left corner as both A. aurita and P. punctata. In Fig. 10b, the improved network correctly detects two P. punctata but overlooks the right jellyfish. Figures 10c,e,f display three evident P. punctata correctly identified by all six algorithms. However, the smaller and farther P. punctata are not recognized by any of the algorithms. Considering the confidence level, the comprehensively improved algorithm achieves confidence levels of 0.99, 0.99, and 0.95, respectively, with more accurate box positioning compared to other methods. Hence, the improved algorithm exhibits the best detection performance.

Figure 11 shows the jellyfish video image captured in the field. The FPS value is displayed in the upper left corner, while the name and number above each bounding box indicate the identified jellyfish species and the confidence of the identification. The average FPS of the entire video is approximately 20, which is due to the large image resolution of 2448 × 2018.

Based on the analysis of experimental data, visualization effects, and performance metrics such as mAP, FPS, precision, recall, and F1-score, the improved algorithm proposed in this paper offers the best overall performance among the algorithms in the ablation experiment. It achieves the highest mAP, recall, and F1-score, a precision of 92.56%, and a detection speed of 223 FPS, which is only slightly below that of the original YOLOv4-tiny. The experimental analysis also demonstrates that the improved algorithm produces the best detection effects for the jellyfish examples.

The high FPS value indicates the algorithm's ability to perform rapid detection, which is crucial for real-time applications. The high F1-score suggests that the network structure is stable, and the algorithm achieves a balanced performance in terms of precision and recall.

Overall, the comprehensively improved algorithm presented in this paper enhances the accuracy of jellyfish detection while ensuring fast and efficient identification. It meets the requirements for rapid and accurate identification of jellyfish.
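As a quick consistency check on the figures quoted in this section, the snippet below recomputes an F1 score from the reported average precision (92.56%) and recall (89.69%), together with the speed ratios implied by the reported FPS values. It is only an arithmetic sketch: the F1 values in Table 11 may have been averaged per class and need not coincide with this number, and the function name `f1_score` is illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both as fractions in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Values quoted in the text for the comprehensively improved algorithm.
f1 = f1_score(0.9256, 0.8969)

# Speed ratios from the reported FPS figures.
tiny_vs_yolov4 = 248 / 43.9        # original YOLOv4-tiny vs. YOLOv4
improved_vs_yolov3 = 223 / 52.53   # improved algorithm vs. earlier improved YOLOv3

print(round(f1, 3), round(tiny_vs_yolov4, 2), round(improved_vs_yolov3, 2))
```

The resulting F1 of roughly 0.91 agrees with the statement that the scores cluster around 0.90, and the ratios of about 5.6 and 4.2 correspond to the speed-ups discussed above.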

Conclusions

This study addresses the demand for jellyfish detection by taking several important steps. First, a new dataset containing a large number of images from seven jellyfish species is established, including both publicly available data and data collected in the laboratory. This dataset serves as a valuable resource for further research and development in the field of jellyfish detection. Next, to improve the quality of underwater images and enhance jellyfish detection, this paper proposes an MSRCR underwater image enhancement algorithm with fusion, and demonstrates the effectiveness and superiority of the proposed method through various objective image evaluation parameters. Furthermore, an improved YOLOv4-tiny jellyfish detection algorithm is proposed. This algorithm combines mosaic data augmentation, cosine annealing, and label smoothing methods for weight training, and incorporates CBAM modules to improve feature extraction capabilities, achieving both accuracy and real-time performance in jellyfish detection. Multiple evaluation results from YOLOv4 series ablation experiments and YOLO series comparative experiments demonstrate the superiority and practicality of the proposed algorithm, meeting the requirements for real-time and accurate detection of jellyfish.

While the proposed algorithm shows promising results, there are still challenges to overcome. These include slow processing speed for high-resolution videos, difficulties in handling multiple overlapping jellyfish scenes, and potential missed detections. Future research efforts will focus on improving the algorithm's ability to handle jellyfish overlap and increasing the processing speed for high-resolution images.

Overall, this study provides a template for jellyfish detection, and our proposed algorithm demonstrates good robustness and detection performance, with certain application and reference value in practical engineering detection. It highlights the research potential of the YOLO-tiny series method in jellyfish detection and sets the stage for future advancements in the field.

Data availability

One dataset is available in [Martin-Abadal, M. Jellyfish Object Detection] repository, https://github.com/srv/jf_object_detection; An additional portion of the dataset analyzed during the current study is available from the corresponding authors upon reasonable request.

References

Jichang, G. et al. Research progress of underwater image enhancement and restoration methods. J. Image Graph. 22(3), 0273–0287 (2017).

Song, S. Key process, mechanism and ecological environment effect of jellyfish outbreak in China’s offshore waters. China Sci. Technol. Achiev. 17(19), 12–13 (2016).

Zhaoyang, C. & Peimin, H. Ocean eutrophication trend and ecological restoration strategy in China. Science 65(4), 48–52 (2013).

Dongfang, Y. et al. Application of new technology in jellyfish monitoring. Ocean Dev. Manag. 31(4), 38–41 (2014).

Moon, J. H. et al. Behavior of the giant jellyfish Nemopilema nomurai in the East China Sea and East Japan Sea during the summer of 2005: A numerical model approach using a particle-tracking experiment. J. Mar. Syst. 80(1), 101–114 (2009).

Fang, Z. et al. Research progress on the formation mechanism, monitoring and prediction, prevention and control technology of jellyfish disasters. Oceans Lakes 48(6), 1187–1195 (2017).

JianYan, W. et al. Molecular identification and detection of moon jellyfish (Aurelia sp.) based on partial sequencing of mitochondrial 16S rDNA and COI. J. Appl. Ecol. 24(3), 847–852 (2013).

Cong, L. Present situation and prospect of jellyfish disaster research in China. Fish. Res. 40(2), 156–162 (2018).

Gorpincenko, A. et al. Improving automated sonar video analysis to notify about jellyfish blooms. IEEE Sens. J. 21(4), 4981–4988 (2020).

Zhang, Y. et al. A method of jellyfish detection based on high resolution multibeam acoustic image. In MATEC Web of Conferences, Le Mans, France, 28–28 June 2019.

Gustavo, A. C., Hermes, M. & Adrian, M. Acoustic characterization of gelatinous-plankton aggregations: Four case studies from the Argentine continental shelf. ICES J. Mar. Sci. 60(3), 650–657 (2003).

Lu, Z. et al. Effective guided image filtering for contrast enhancement. IEEE Signal Process. Lett. 25(10), 1585–1589 (2018).

Liu, Z. Research on Underwater Image Restoration Method Based on Dark Channel Apriori Algorithm (Dalian Maritime University, 2021).

Li, Y., Yu, H. & Liang, T. Research on image defogging by combining dark channel and MSRCR algorithm. Mod. Comput. Sci. (22), 24–30 (2022–28).

Zhou, J. et al. Auto color correction of underwater images utilizing depth information. IEEE Geosci. Remote Sens. Lett. 19, 1504805 (2022).

Zhou, J., Pang, L. & Zhang, W. Underwater image enhancement method by multi-interval histogram equalization. IEEE J. Oceanic Eng. 48(2), 474–488 (2023).

Davis, C. S. et al. Microaggregations of oceanic plankton observed by towed video microscopy. Science 257(5067), 230 (1992).

Houghton, J. D. R. et al. Developing a simple, rapid method for identifying and monitoring jellyfish aggregations from the air. Mar. Ecol. Prog. Ser. 314(1), 159–170 (2006).

Kim, D. et al. Development and experimental testing of an autonomous jellyfish detection and removal system robot. Int. J. Control Autom. Syst. 14(1), 312–322 (2016).

Seonghun, K. et al. Vertical distribution of giant jellyfish, Nemopilema nomurai using acoustics and optics. Ocean Sci. J. 51(1), 59–65 (2016).

Kim, H. et al. Development of a UAV-type jellyfish monitoring system using deep learning. In International Conference on Ubiquitous Robots & Ambient Intelligence, Seoul, Korea, 28–30 October 2015.

Jungmo, K., Sungwook, J. & Hyun, M. A. Jellyfish distribution management system using an unmanned aerial vehicle and unmanned surface vehicles. In Proceedings of the 2016 IEEE International Underwater Technology Symposium, Busan, Korea (South), 21–24 February 2017.

Martin-Abadal, M. et al. Jellytoring: Real-time jellyfish monitoring based on deep learning object detection. Sensors 20(6), 1708 (2020).

Martin, V. et al. Towards automated scyphistoma census in underwater imagery: A useful research and monitoring tool. J. Sea Res. 142, 147–156 (2018).

Qiuyue, C. Research on jellyfish detection algorithm based on convolutional neural network. Master's Thesis in Electronic Science and Technology (Yanshan University, 2021).

Rahman, Z., Jobson, D. J. & Woodell, G. A. Multi-scale retinex for color image enhancement. In Proceedings of 3rd IEEE International Conference on Image Processing, 19 September 1996.

Foster, D. H. Color constancy. Vis. Res. 51(7), 674–700 (2011).

Zimmerman, J. B. et al. An evaluation of the effectiveness of adaptive histogram equalization for contrast enhancement. IEEE Trans. Med. Imaging 7(4), 304–312 (1988).

Bjørke, J. T. Framework for entropy-based map evaluation. Am. Cartog. 23(2), 78–95 (2013).

Ancuti, C. et al. Enhancing underwater images and videos by fusion. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, 16–21 June 2012.

Yang, M. & Sowmya, A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 24(12), 6062–6071 (2015).

Panetta, K., Gao, C. & Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Oceanic Eng. 41(3), 541–551 (2015).

Shao, Z., Liu, J. & Cheng, Q. Fusion of infrared and visible images based on focus measure operators in the curvelet domain. Appl. Opt. 51(12), 1910–1921 (2011).

Redmon, J. et al. You only look once: Real-time object detection. In Computer Vision & Pattern Recognition, Las Vegas, USA, 27–30 June 2016.

Redmon, J. & Farhadi, A. YOLO9000: Better, faster, stronger. In IEEE Conference on Computer Vision & Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

Redmon, J. & Farhadi, A. YOLOv3: An incremental improvement. arXiv preprint arXiv:1804.02767 (2018).

Bochkovskiy, A., Wang, C. Y. & Liao, H. Y. M. YOLOv4: Optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934 (2020).

Woo, S. et al. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

Meijing, G. et al. Real-time jellyfish classification and detection based on improved YOLOV3 algorithm. Sensors 21(23), 8160 (2021).

Funding

This research was funded by the National Natural Science Foundation of China (61971373) and the Hebei Graduate Innovation Funding Project of China (CXZZBS2022148). Corresponding author: Meijing Gao.

Author information

Contributions

Conceptualization, M.G.; data curation, N.G.; formal analysis, B.Z.; funding acquisition, M.G.; investigation, M.G.; methodology, S.L.; resources, M.G.; software, Y.D.; supervision, B.Z.; validation, S.L., K.W. and Y.B.; visualization, P.W.; writing—original draft, K.W.; writing—review and editing, S.L., K.W. and Y.B.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gao, M., Li, S., Wang, K. et al. Real-time jellyfish classification and detection algorithm based on improved YOLOv4-tiny and improved underwater image enhancement algorithm. Sci Rep 13, 12989 (2023). https://doi.org/10.1038/s41598-023-39851-7

DOI: https://doi.org/10.1038/s41598-023-39851-7
